The superintelligence we should be afraid of is us

There has been a lot of talk about the risk of human extermination once we reach the singularity and create a strong, sentient artificial intelligence. The list of alarmists is long and distinguished: Elon Musk, Bill Gates, Stephen Hawking, and others.

But who is raising the alarm about human superintelligence?

I’m more worried about a person or group of people with IQs above 300 than I am about Skynet. As a friend said, if you’re worried about Skynet rising up and destroying humanity, don’t build Skynet. If you’re worried about your sentient AI getting loose and raising hell, air-gap it. And so on.

But how do you control the systematic enhancement of human beings? What will those human beings be capable of? And what if it is done as part of a concerted national strategy?

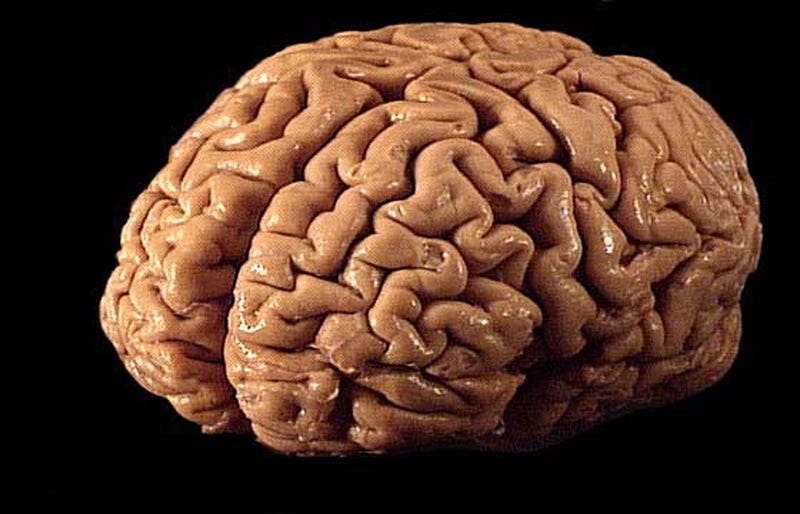

I’m worried about superintelligence, but it’s the next step in evolution beyond Homo sapiens that concerns me. Our own species doesn’t have a great record of treating lesser intelligences well.

Without Love Intelligence Is Dangerous; Without Intelligence Love Is Not Enough. — Ashley Montagu

Why worry? Read these:

Physicist Stephen Hsu claims that by tweaking our genome, we could create humans with IQs above 1000.

Scientists have created ‘brainets’ that network brains together to work on shared tasks, in experiments with rats and with monkeys. The idea is that people could eventually be networked to share abilities across a group.

According to rumors, BGI Shenzhen has collected DNA from 2,000 of the world’s smartest people to identify the alleles that determine human intelligence. Geoffrey Miller writes about this on edge.org.

Chinese scientists have built a giant animal-cloning factory, and its backers have reportedly said the technology could be used to clone humans.

And what happens when we create brain-computer interfaces that allow us to wire enhanced human minds into advanced AI? The wetware worries me more than the hardware.

Thoughts?